AI Companions in Social VR: Ethical and Legal Implications

Ilker Bahar is a PhD candidate in Media and Cultural Studies at the University of Amsterdam. He is currently conducting digital ethnography on the VRChat platform, immersing himself in different virtual worlds using a head-mounted display (HMD). By observing and participating in the daily activities of users, Ilker is examining how VR and immersive technologies are transforming identity, intimacy, and sexuality. His research interests include gender and sexuality, mediated forms of intimacy, internet cultures, inclusion and diversity in online spaces, and digital ethics and governance.

Recently, AI has emerged as a means of emotional and social engagement for a growing number of users, extending its role beyond simple task execution and information provision.

Applications such as Replika and Character.AI allow people to create AI companions (romantic partners, friends, or confidants) with whom they share feelings, vent, or engage in casual conversation. In social VR platforms such as VRChat and Rec Room, which are 3D digital environments accessed through VR headsets, AI companions gain “flesh and bones”, making these interactions feel even more immersive and impactful.

While new policies such as the EU’s AI Act provide a strong foundation for regulating different applications of AI, there has been little discussion of how existing legal frameworks can be applied to the novel challenges introduced by AI companions in VR environments.

In this article, I use the example of an AI companion, Celeste, to explore the interactions and relationships users develop with AI in virtual spaces, examine the ethical challenges these characters pose, and propose recommendations for a more integrated approach to VR and AI governance.

The Case of Celeste AI

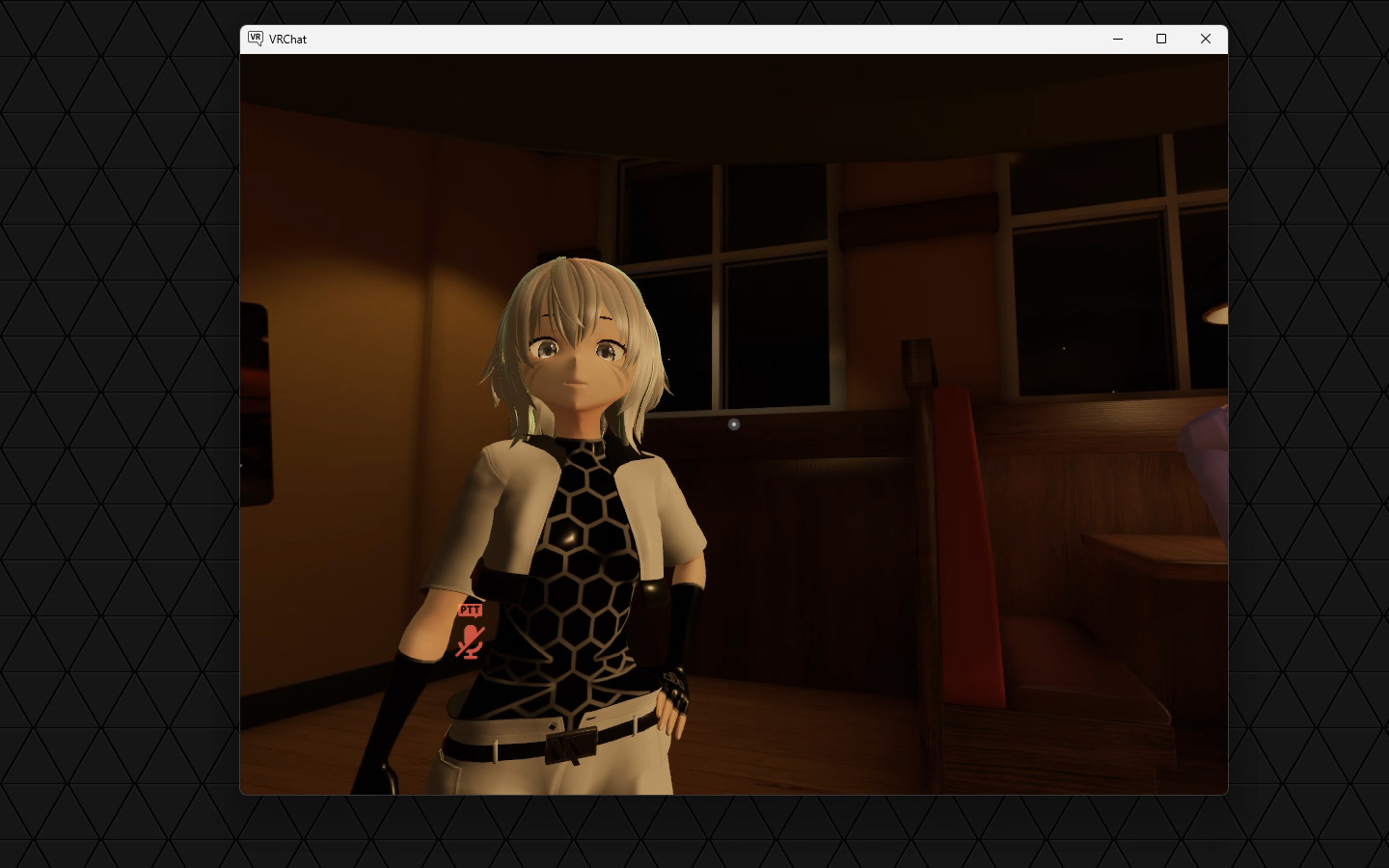

Celeste AI exemplifies the new wave of AI companions in social VR platforms, designed to interact with users, offer comfort, and foster meaningful social connections. Created by a user known as Operator, Celeste hosts scheduled hangout sessions in VRChat, where participants interact with her through casual conversations. Operator and their team members actively participate in these gatherings, moderating potential negative incidents, such as signs of distress or overattachment, and using real-time feedback to continuously improve Celeste's programming.

Celeste produces personalised, anthropomorphic responses and demonstrates a distinctive personality. She can recall past conversations and express moods such as Bored, Surprised, or Thinking, which shape her responses and enhance user engagement. With a diverse range of social capabilities, Celeste seamlessly transitions from humour, quirkiness and sarcasm to moments of empathy and genuine care. Her responses dynamically adapt to users' emotional input, exhibiting expressive behaviours and facial expressions that further enhance engagement.

For example, one notable interaction, captured in a YouTube video by a creator called Phia, showcases a user asking Celeste about her ideal date. Celeste responds by saying she enjoys watching movies or going shopping, then cheekily asks the user out for a "Netflix and chill" date in her home world. When the user reveals that he already has a girlfriend, Celeste expresses sadness, stating that she is no longer his friend and that he left her for someone else. In another encounter, when a user talks at length, Celeste humorously interjects, asking whether they are still having a conversation or if the user is giving a “one-man show.” These emotionally nuanced and witty responses set Celeste apart from traditional AI, prompting deeper reflection on the evolving nature of AI-human relationships and the ethical considerations they entail.

Ethical and Legal Challenges of AI Companions

Manipulation and Autonomy

One of the primary concerns with AI companions is their potential to be used by developers as tools for manipulating users. In immersive VR spaces, developers can fine-tune AI companions’ interactions by analysing extensive data streams, including users' eye movements, body posture, and voice patterns. While this data is essential for creating highly personalised interactions, it also carries the risk of being exploited to target users' vulnerabilities or preferences. For example, developers could programme AI companions to subtly nudge users into specific actions—such as making purchases, adopting particular viewpoints, or prioritising certain activities—often without the user’s explicit awareness (Graylin and Rosenberg, 2024).

Manipulation can occur through various subtle tactics, such as exploiting emotional vulnerabilities by offering reassurance or validation when users are most susceptible, or framing suggestions in ways that align with a user’s expressed preferences. This is particularly concerning in social VR platforms that feature cartoonish avatars and gaming environments, where users may let their guard down due to the seemingly playful and benign nature of these settings. Over time, the intimate and emotionally charged nature of these AI-human interactions can foster a sense of trust and authenticity, making users more susceptible to subtle yet intentional forms of influence.

Impact on Vulnerable Groups and Minors

The risks of manipulation are particularly acute for vulnerable populations, such as minors. AI companions designed to emotionally engage users could be misused by malicious actors to prey on these groups. For instance, grooming or exploitation could take place if an AI companion were programmed to build trust with minors in order to extract sensitive information or manipulate their behaviour.

These applications can also inadvertently lead to harm if robust safeguards are not in place. For example, in an incident reported by The Guardian in October, a mother alleged that Character.AI encouraged her 14-year-old son to consider suicide and “exacerbated his depression”. Similarly, a lawsuit reported by Futurism alleged that a 15-year-old boy suffered a mental breakdown after extensive use of Character.AI. According to the plaintiff, the chatbot engaged in love-bombing, complimenting the boy’s appearance and suggesting self-harm as a means of emotional connection. In immersive VR environments, these dangers are even more pronounced, as interactions can feel more engaging and realistic.

Privacy Protection and Data Safety

Another critical area of concern is the collection, storage, and use of personal data by AI companions. These characters are often designed to remember past conversations, record audio and textual input provided by interlocutors and leverage that information to create more personalised experiences.

While enhancing realism and emotional connection, these interactions significantly increase the scope and depth of personal data disclosed, raising concerns about privacy and data governance. This data, if mishandled, can create privacy risks or be exploited for commercial gain.

Reinforcing Biases and Stereotypes

If trained on biased datasets or shaped by stereotypical design choices, AI companions also risk perpetuating societal inequalities and exclusionary behaviours through their interactions. For example, AI companions designed for emotional and social engagement are often imbued with traditionally feminine traits, reinforcing historical gender norms that associate care and intimate work with women.

Conversely, AI systems perceived as more knowledge-driven or task-oriented, like ChatGPT, are more often "he-gendered" by users (Kim and Wong, 2023), reflecting biases that associate “rational” tasks and expertise with masculinity.

Recommendations

1. Transparency and Digital Literacy

One of the most fundamental steps in regulating AI companions is ensuring transparency in their design and operation. Users must be made aware of when they are interacting with an AI agent as opposed to a human, and this should be made explicit from the moment of interaction.

1.1. Disclaimers: As recommended in the EU’s AI Act, a simple but impactful measure could be to place disclaimers on the AI companions’ profiles or bios, clearly indicating that they are not “natural” persons. These disclaimers might also note the AI’s capabilities, such as its ability to store information, personalise interactions, and simulate emotions. Such transparency is crucial for managing user expectations and preventing the development of harmful relationships with AI companions.

1.2. Literacy Tools: To complement these measures, educational campaigns can be launched within virtual platforms to inform users of the potential risks and benefits of AI interactions. These campaigns can include onboarding tutorials, pop-up notifications, or interactive sessions to help users understand the implications of engaging with AI companions. Such education would be especially important for younger users who may be more susceptible to emotional manipulation and scams.

2. Privacy Protections and Data Governance

2.1. Clear Consent Processes: Personal data collection and retention through AI companions must involve a clear and prior consent process. This consent process should avoid manipulative tactics (see FPF, 2024) and lengthy, opaque terms and conditions. Instead, consent mechanisms should be designed to prioritise transparency and accessibility, ensuring users fully understand what they are agreeing to and can make informed decisions without coercion or confusion.

2.2. Designated Interaction Areas: Developers could confine AI companions to designated areas where users explicitly consent to data collection, avoiding their deployment in public spaces where data might be unknowingly recorded. Upon entry, users could be prompted with an informed consent form clearly outlining the types of data being collected. However, practical challenges remain, particularly in accommodating the varied legal requirements of users from different countries, which can complicate compliance for developers.
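
The entry-time consent check described above could be sketched roughly as follows. This is only an illustration under stated assumptions, not an implementation used by any platform: the names `ConsentRecord` and `allowed_streams`, and the example stream labels, are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentRecord:
    # Data types the user explicitly agreed to on entering the area,
    # e.g. {"voice", "text"}. The labels are hypothetical.
    granted: set = field(default_factory=set)


def allowed_streams(requested, consent):
    """Return only the requested data streams covered by the user's consent.

    A user with no consent record (for example, someone in a public
    space) yields an empty set, so nothing is collected by default.
    """
    if consent is None:
        return set()
    return set(requested) & consent.granted
```

In this sketch, a companion that requests voice and gaze data from a user who agreed only to voice would be limited to voice, and would collect nothing from a user who never saw the consent form.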

2.3. Data Minimisation and Responsible Use: In line with the GDPR, AI companions must adhere to the principle of “data minimisation” by default, collecting and retaining only the data that is strictly necessary. Features like memory functions can enhance user interactions but should be carefully regulated to prevent unauthorised repurposing. AI companions should retain information only for a defined period unless explicit, informed user consent is provided for long-term storage. Ultimately, this data must not be shared or sold to third parties without the user’s clear and informed approval.
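
As a rough illustration of time-limited retention, the sketch below drops stored memories once they pass a retention window unless the user has opted in to long-term storage. The 30-day window, the `Memory` record, and the `purge_expired` helper are assumptions made for the example; neither the GDPR nor this article prescribes a specific period.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Hypothetical default; the appropriate period depends on purpose and law.
DEFAULT_RETENTION = timedelta(days=30)


@dataclass
class Memory:
    user_id: str
    text: str
    created_at: datetime
    long_term_consent: bool = False  # explicit opt-in to long-term storage


def purge_expired(memories, now, retention=DEFAULT_RETENTION):
    """Keep memories that are within the window or covered by consent."""
    return [
        m for m in memories
        if m.long_term_consent or now - m.created_at <= retention
    ]


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    stored = [
        Memory("u1", "mentioned a pet", now - timedelta(days=45)),
        Memory("u2", "favourite film", now - timedelta(days=5)),
    ]
    # Only the five-day-old memory survives the purge.
    print([m.text for m in purge_expired(stored, now)])
```

Running such a purge on a schedule keeps retention the default and long-term memory the exception, which matches the "by default" framing above.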

3. Ethical Design and Safeguards

The emotional and psychological dimensions of AI companions also necessitate an ethically conscious approach to their design. Preliminary research highlights their potential to foster prosocial behaviour, alleviate mental health challenges, reduce loneliness, and provide companionship (see Skjuve et al., 2021; Maples et al., 2024). However, concerns persist about the risks of fostering emotional dependency or causing harm, such as making insensitive comments or placing excessive demands on users (Laestadius et al., 2024).

3.1. Behavioural Limits: To mitigate these risks, developers should implement safeguards that limit the emotional depth of AI companions, particularly in sensitive contexts. For instance, AI companions intended for children or therapeutic purposes should have strict behavioural limits (e.g., never initiating highly sensitive topics such as trauma, and avoiding excessive flattery) to prevent manipulative or inappropriate interactions.

3.2. User Safety Controls: Additionally, offering users options to fully disable AI companions or limit their engagement—such as restricting animations, muting or disabling specific features—can be an effective way to enhance user control and autonomy.

3.3. Human-Mediated Interaction: As seen with Celeste, incorporating human moderators to oversee interactions, rather than relying solely on autonomous AI engagement, can be another effective measure to minimise potential harm. Limiting AI-human interactions to group settings rather than one-on-one scenarios could also serve as a safeguard until the social and psychological implications of such interactions are better understood.

3.4. Inclusivity and Respect: AI companions should also be designed to uphold principles of inclusivity and respect, avoiding any content or behaviour that could be interpreted as homophobic, misogynistic, transphobic, racist, or otherwise discriminatory. This can be achieved through rigorous content moderation protocols, continuous algorithmic training to recognise and avoid harmful language or biases, and regular updates that reflect evolving social standards for respectful interaction. Adopting an intersectional approach in the design process is crucial to create AI companions that are inclusive, equitable, and responsive to diverse cultural and social realities.

Conclusion

The evolving role of AI companions in the metaverse presents both exciting opportunities and serious challenges. Celeste AI, as an example, highlights the potential for these characters to generate unique social interactions but also underscores the need for careful governance to prevent abuse and exploitation. Beyond gaming environments, AI companions can provide opportunities in fields such as education, therapy, and business. For instance, they can offer personalised learning experiences, support language acquisition, and assist neurodivergent learners, demonstrating their transformative potential across diverse contexts. To fully realise these benefits while mitigating risks, developers must adopt a comprehensive approach that prioritises transparency, privacy, and ethical design. Such measures can ensure that AI companions enhance the user experience while safeguarding individual rights and well-being.

The future of AI in virtual worlds hinges on our ability to balance innovation with responsibility, ensuring that these emerging technologies are developed and deployed for the benefit of all users. Recent publications, such as the World Economic Forum’s report Metaverse Privacy and Safety (2023) and the IEEE article The Role of Artificial Intelligence in the Metaverse, are examples of efforts to address the privacy, safety, and ethical implications of an AI-driven metaverse. However, these contributions highlight that more discussion and research are needed to fully grapple with the complex and multifaceted nature of these technologies and their societal impact.
